Chi-square test (χ²): what is it and how is it used in statistics?

The chi-square test is a tool of descriptive statistics. Let's see how it is used.

In statistics, there are several tests for analyzing the relationship between variables. Nominal variables are those whose values can only be compared for equality or inequality, such as gender.

In this article we will learn about one of the tests for analyzing independence between nominal or higher-level variables: the chi-square test, applied through hypothesis testing (goodness-of-fit tests).

What is the chi-square test?

The chi-square test, written χ², is one of the tests belonging to descriptive statistics, specifically descriptive statistics applied to the study of two variables. Descriptive statistics focuses on extracting information about the sample, whereas inferential statistics extracts information about the population. The same statistic is also used inferentially, in the hypothesis tests described later in this article.

The name of the test comes from the chi-square probability distribution on which it is based. The test was developed in 1900 by Karl Pearson.

The chi-square test is one of the best-known and most widely used tests for analyzing nominal or qualitative variables, that is, for determining whether or not two variables are independent. Two variables are independent when they have no relationship, so that neither one depends on the other.

Studying independence in this way also yields a method for checking whether the frequencies observed in each category are compatible with independence between the two variables.

How is independence between variables obtained?

To evaluate independence between the variables, we calculate the values that would indicate complete independence, called "expected frequencies", and compare them with the frequencies observed in the sample.
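For a contingency table, the expected frequency of each cell under independence is its row total multiplied by its column total, divided by the grand total. The following is a minimal sketch, assuming the observed counts are stored as a list of rows; the function name `expected_frequencies` and the table values are hypothetical, for illustration only.

```python
def expected_frequencies(observed):
    """Return the table of expected frequencies under independence.

    `observed` is a contingency table given as a list of rows of counts.
    Each expected cell is (row total * column total) / grand total.
    """
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    return [[r * c / grand_total for c in col_totals] for r in row_totals]


# Hypothetical 2x3 table of counts, for illustration only.
table = [[10, 20, 30],
         [20, 20, 20]]
print(expected_frequencies(table))  # → [[15.0, 20.0, 25.0], [15.0, 20.0, 25.0]]
```

Note that the expected table keeps the same row and column totals as the observed one; only the way the counts are distributed inside the table changes.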

As usual, the null hypothesis (H0) indicates that both variables are independent, while the alternative hypothesis (H1) indicates that the variables have some degree of association or relationship.

Correlation between variables

Thus, like other tests with the same purpose, the chi-square test is used to assess whether there is an association between two nominal or higher-level variables (for example, we can apply it if we want to know whether there is a relationship between sex [being male or female] and the presence of anxiety [yes or no]).
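For the sex and anxiety example, the statistic can be computed by summing (observed − expected)² / expected over the cells of the 2x2 table. This is a minimal sketch; the counts below are hypothetical and do not come from any study, and the function name `chi_square_statistic` is ours.

```python
def chi_square_statistic(observed):
    """Pearson's chi-square statistic for a contingency table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / n  # expected count under independence
            chi2 += (o - e) ** 2 / e
    return chi2


# Hypothetical counts (not real data):
# rows = sex (male, female), columns = anxiety (yes, no).
counts = [[15, 35],
          [25, 25]]
print(round(chi_square_statistic(counts), 3))  # → 4.167
```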

To determine this type of relationship, we consult a table of frequencies (a contingency table), as is also done for other measures such as Yule's Q coefficient.

If the empirical (observed) frequencies and the theoretical (expected) frequencies coincide, there is no relationship between the variables, i.e. they are independent. On the other hand, if they do not coincide, the variables are not independent (there is a relationship between them, for example between X and Y).

Considerations

Unlike other tests, the chi-square test places no restriction on the number of categories per variable, and the number of rows in the table need not match the number of columns.

However, it must be applied to independent samples, and only when all the expected values are greater than 5. As already mentioned, the expected values are those that would indicate complete independence between the two variables.
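The expected-value condition can be checked before running the test. This is a minimal sketch that applies the rule exactly as stated above (every expected value greater than 5); the function names and the example tables are hypothetical.

```python
def expected_frequencies(observed):
    """Expected counts under independence: row total * column total / grand total."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


def expected_counts_ok(observed, minimum=5):
    """Return True if every expected frequency is greater than `minimum`."""
    return all(e > minimum for row in expected_frequencies(observed) for e in row)


# Hypothetical tables, for illustration only.
print(expected_counts_ok([[15, 35], [25, 25]]))  # expected counts 20 and 30 → True
print(expected_counts_ok([[2, 8], [3, 7]]))      # expected counts 2.5 and 7.5 → False
```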

In addition, to use the chi-square test, the level of measurement must be nominal or higher. The statistic has no upper limit: it takes values between 0 and infinity, so it does not tell us the strength of the association.

Moreover, as the sample size increases, the chi-square value increases, but we must be cautious in interpreting this, because it does not mean that the association is stronger.
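The effect of sample size can be seen directly: multiplying every count by a factor k multiplies each expected count by k as well, so each term (kO − kE)² / (kE) equals k(O − E)² / E and the statistic grows k-fold even though the proportions, and therefore the pattern of association, are unchanged. A minimal sketch with hypothetical counts:

```python
def chi_square_statistic(observed):
    """Pearson's chi-square statistic for a contingency table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    return sum(
        (o - row_totals[i] * col_totals[j] / n) ** 2 / (row_totals[i] * col_totals[j] / n)
        for i, row in enumerate(observed)
        for j, o in enumerate(row)
    )


# Hypothetical counts; `larger` keeps the same proportions with 10x the sample.
smaller = [[15, 35], [25, 25]]
larger = [[10 * count for count in row] for row in smaller]

print(round(chi_square_statistic(smaller), 3))  # → 4.167
print(round(chi_square_statistic(larger), 3))   # → 41.667
```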

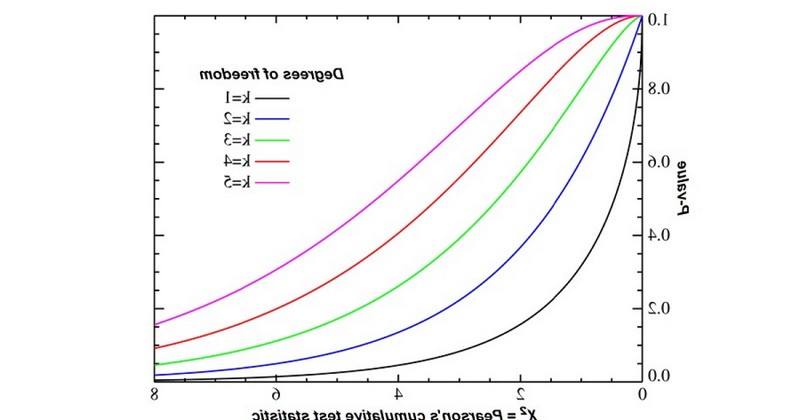

Chi-square distribution

The chi-square test uses the chi-square distribution as an approximation to assess the probability of a discrepancy equal to or greater than the one observed between the data and the frequencies expected under the null hypothesis.
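That probability (the p-value) is the upper tail of the chi-square distribution, evaluated at the observed statistic with (r − 1)(c − 1) degrees of freedom for a table with r rows and c columns. In practice a library routine such as SciPy's `scipy.stats.chi2.sf` would normally be used; the sketch below instead computes the tail from the regularized incomplete gamma function so that it needs only the standard library. The function names `chi2_sf` and `degrees_of_freedom` are hypothetical, and the series is adequate for moderate values of the statistic rather than a production-grade implementation.

```python
import math


def chi2_sf(x, df, max_terms=1000):
    """Upper-tail probability P(X >= x) for a chi-square variable with `df` degrees of freedom.

    Uses sf(x) = 1 - P(df/2, x/2), where P is the regularized lower incomplete
    gamma function, summed here as a power series.
    """
    if x <= 0:
        return 1.0
    a = df / 2.0
    z = x / 2.0
    # P(a, z) = z**a * exp(-z) / Gamma(a + 1) * sum_n z**n / ((a+1)(a+2)...(a+n))
    term = 1.0
    series = 1.0
    for n in range(1, max_terms):
        term *= z / (a + n)
        series += term
        if term < series * 1e-16:
            break
    lower = math.exp(a * math.log(z) - z - math.lgamma(a + 1)) * series
    return max(0.0, 1.0 - lower)


def degrees_of_freedom(n_rows, n_cols):
    """Degrees of freedom for an r x c contingency table: (r - 1)(c - 1)."""
    return (n_rows - 1) * (n_cols - 1)


# Hypothetical statistic from a 2x2 table.
df = degrees_of_freedom(2, 2)
print(df)                   # → 1
print(chi2_sf(25 / 6, df))  # upper-tail probability (p-value)
```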

The accuracy of this assessment depends on the expected values not being too small and, to a lesser extent, on the differences between them not being too large.

Yates correction

The Yates correction is an adjustment applied to 2x2 tables when some theoretical (expected) frequency is small (less than 10), to correct for possible errors in the chi-square test.
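The correction subtracts 0.5 from each absolute difference between observed and expected frequencies before squaring, so each term becomes (|O − E| − 0.5)² / E. A minimal sketch follows; capping the subtraction at |O − E| (so a corrected difference never goes below zero) is our design choice, and the function name and counts are hypothetical.

```python
def yates_chi_square(observed):
    """Chi-square statistic with Yates' continuity correction for a 2x2 table.

    Each absolute deviation |O - E| is reduced by 0.5 before squaring; the
    reduction is capped so the corrected deviation never drops below zero.
    """
    if len(observed) != 2 or any(len(row) != 2 for row in observed):
        raise ValueError("Yates' correction is defined here for 2x2 tables only")
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            corrected = max(abs(observed[i][j] - expected) - 0.5, 0.0)
            chi2 += corrected ** 2 / expected
    return chi2


# Hypothetical 2x2 counts, for illustration only.
print(round(yates_chi_square([[15, 35], [25, 25]]), 3))  # → 3.375
```

For this table the uncorrected statistic is about 4.167, so the correction makes the test more conservative.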

In general, the Yates correction, or "continuity correction", is applied when a discrete variable is approximated by a continuous distribution.

Hypothesis testing

In addition, the chi-square test belongs to the so-called goodness-of-fit tests, which aim to decide whether we can accept the hypothesis that a given sample comes from a population with a probability distribution fully specified in the null hypothesis.

The tests are based on the comparison of the observed frequencies (empirical frequencies) in the sample with those that would be expected (theoretical or expected frequencies) if the null hypothesis were true. Thus, the null hypothesis is rejected if there is a significant difference between the observed and expected frequencies.
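In symbols, for a variable with k categories, where O_i is the observed frequency of category i, p_i its probability under the null hypothesis, and N the sample size, the goodness-of-fit statistic compares observed and expected frequencies as follows (the degrees of freedom are k − 1 when the null hypothesis fully specifies the distribution, so no parameters are estimated from the sample):

```latex
\chi^2 = \sum_{i=1}^{k} \frac{(O_i - E_i)^2}{E_i},
\qquad E_i = N\,p_i,
\qquad \mathrm{df} = k - 1
```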

Procedure

As we have seen, the chi-square test is used with data measured on a nominal scale or higher. With chi-square, we establish a null hypothesis that postulates a fully specified probability distribution as the mathematical model of the population that generated the sample.

Once we have the hypothesis, we must perform the test, and for this we arrange the data in a frequency table. The observed (empirical) absolute frequency is recorded for each value or interval of values. Then, assuming that the null hypothesis is true, we calculate for each value or interval of values the absolute frequency that would be expected, called the expected frequency.
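The steps just described can be sketched for a single variable: record the observed count per category, compute the expected count N·p_i from the distribution stated in the null hypothesis, and sum the squared discrepancies divided by the expected counts. This is a minimal sketch; the die-rolling data are hypothetical and the function name `goodness_of_fit` is ours.

```python
import math


def goodness_of_fit(observed, probabilities):
    """Chi-square goodness-of-fit statistic and its degrees of freedom.

    `observed` holds the observed counts per category; `probabilities` is the
    distribution fully specified by H0 (one probability per category).
    """
    if len(observed) != len(probabilities):
        raise ValueError("one probability per category is required")
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    n = sum(observed)
    expected = [n * p for p in probabilities]  # expected counts if H0 is true
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return chi2, len(observed) - 1  # df = k - 1 (no parameters estimated)


# Hypothetical data: 60 rolls of a die; H0 says the die is fair.
rolls = [8, 12, 9, 11, 6, 14]
statistic, df = goodness_of_fit(rolls, [1 / 6] * 6)
print(round(statistic, 3), df)  # → 4.2 5
```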

Interpretation

The chi-square statistic takes the value 0 if there is perfect agreement between the observed and expected frequencies; conversely, it takes a large value if there is a large discrepancy between them, in which case the null hypothesis should be rejected.
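The decision can be sketched by comparing the statistic with a critical value of the chi-square distribution. This minimal sketch assumes a 2x2 table (1 degree of freedom) and a 0.05 significance level, for which the standard table value is 3.841; the function names and counts are hypothetical.

```python
def chi_square_statistic(observed):
    """Pearson's chi-square statistic for a contingency table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / n  # expected count under independence
            chi2 += (o - e) ** 2 / e
    return chi2


# Standard table value: critical chi-square for 1 degree of freedom at alpha = 0.05.
CRITICAL_VALUE_DF1_ALPHA_05 = 3.841


def reject_independence(observed, critical_value=CRITICAL_VALUE_DF1_ALPHA_05):
    """Reject H0 (independence) when the statistic exceeds the critical value."""
    return chi_square_statistic(observed) > critical_value


# Hypothetical tables, for illustration only.
print(chi_square_statistic([[20, 30], [20, 30]]))  # → 0.0 (observed equals expected)
print(reject_independence([[15, 35], [25, 25]]))   # → True (about 4.167 > 3.841)
```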


(Updated April 14, 2024)